More details: http://www.tnou.ac.in/UG.pdf

Tnou Study Center

Blog Owner: Vignesh A

Thursday, December 27, 2012

Thursday, December 13, 2012

BCA 06 (Introduction to Data Base Management System) TNOU BCA First year Assignment 2012

PART A

Short Answer Questions:

1. What are the drawbacks of commercial databases?

Answer: Existing commercial DBMSs, both small and large, have proven inadequate for applications with complex data. The traditional database notion of storing data in two-dimensional tables, or in flat files, breaks down quickly in the face of the complex data structures and data types used in today’s applications. Research on modeling and processing complex data has gone in two directions:

a. Extending the functionality of RDBMS

b. Developing and implementing OODBMS that is based on object oriented programming paradigm.

The main elements of an RDBMS are based on Ted Codd’s 13 rules for a relational system, the concept of relational integrity, and normalization. A relational database rests on three fundamentals. The first is that all information must be held in the form of tables, where all data are described using data values. The second is that each column holds a single value per row, with no repeating groups. The third is the use of Structured Query Language (SQL).

The language important to an OODBMS is the data definition and manipulation language (DDML). This language allows persistent data to be created, updated, deleted, or retrieved. An OODBMS uses a computationally complete language rather than a purely relational one, because this avoids the impedance mismatch between the database language and the programming language. A DDML allows users to define a database, including creating, altering, and dropping tables and establishing constraints. It is also used to maintain and query a database, including inserting, updating, and querying data.

2. (i) List the factors which motivate the move to the DBMS approach.

Answer:

A Database Management System (DBMS) approach to storing data is a smart move for a business to make. There are many advantages to organizing your data in a database. These range from reducing data redundancy to implementing data access controls. Not only can a company benefit internally from using a DBMS, but its clients can also be confident in the security of their personal information.

(ii) What is the difference between a B-tree and a B+ tree?

Difference between B-tree and B+ tree:

B-tree indices are similar to B+ tree indices. The primary distinction between the two approaches is that a B-tree eliminates the redundant storage of search key values: search keys are not repeated in B-tree indices.

[Figures: a simple B+ tree and a simple B-tree]

The major differences between the B-tree and B+ tree structures are given below.

1. In a B-tree, search keys and data are stored in both internal and leaf nodes. In a B+ tree, data are stored only in leaf nodes.

2. Searching in a B+ tree is uniform because all data are found in leaf nodes, so every lookup descends to a leaf. In a B-tree, a lookup may end at an internal node.

3. In a B-tree, data may be found in leaf or non-leaf nodes, and deleting an entry from a non-leaf node is complicated. In a B+ tree, data are always found in leaf nodes, so deletion is easier.

4. Insertion into a B-tree is more complicated than insertion into a B+ tree.

5. A B+ tree stores redundant search keys, but a B-tree has no redundant values.

6. In a B+ tree, the leaf nodes are chained in a sequential linked list, but in a B-tree the leaf nodes are not linked.

Many database system implementers prefer the structural simplicity of a B+ tree.
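The linked leaf list mentioned in point 6 is what makes range queries cheap in a B+ tree. The following is a minimal Python sketch of that idea only, not a full B+ tree: the names `Leaf`, `build_leaf_chain`, and `range_scan` are made up for illustration, and the internal (index) nodes are left out, so the scan finds its starting leaf by walking the chain instead of descending from a root.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Leaf:
    """One B+ tree leaf: sorted keys, their values, and a sibling pointer."""
    keys: List[int]
    values: List[str]
    next: Optional["Leaf"] = None


def build_leaf_chain(records: List[Tuple[int, str]], leaf_size: int) -> Optional[Leaf]:
    """Pack sorted records into leaves and link each leaf to its right sibling."""
    ordered = sorted(records)
    leaves = []
    for i in range(0, len(ordered), leaf_size):
        chunk = ordered[i:i + leaf_size]
        leaves.append(Leaf([k for k, _ in chunk], [v for _, v in chunk]))
    for left, right in zip(leaves, leaves[1:]):
        left.next = right
    return leaves[0] if leaves else None


def range_scan(first_leaf: Optional[Leaf], low: int, high: int) -> List[Tuple[int, str]]:
    """Collect every record with low <= key <= high by following leaf links."""
    leaf = first_leaf
    # Simplification: a real B+ tree would reach this leaf through the index nodes.
    while leaf is not None and leaf.keys[-1] < low:
        leaf = leaf.next
    found = []
    while leaf is not None:
        start = bisect_left(leaf.keys, low)
        for key, value in zip(leaf.keys[start:], leaf.values[start:]):
            if key > high:
                return found
            found.append((key, value))
        leaf = leaf.next
    return found


head = build_leaf_chain(
    [(5, "e"), (1, "a"), (3, "c"), (7, "g"), (9, "i"), (11, "k")], leaf_size=2
)
result = range_scan(head, 3, 9)
```

Once the first matching leaf is found, the scan never goes back up the tree; it just follows the `next` pointers until a key exceeds the upper bound.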

3. (i) What are the basic relational algebra operations?

Answer:

Relational Algebra:

A query language is a language in which a user requests information from the database. It can be categorized as either procedural or nonprocedural. In a procedural language, the user instructs the system to perform a sequence of operations on the database to compute the desired result. In a nonprocedural language, the user describes the desired information without giving a specific procedure for obtaining it. Relational algebra is a collection of operations used to manipulate relations (tables). These operations let users specify retrieval requests, each of which results in a new relation built from one or more relations. The basic operations are select, project, union, set difference, Cartesian product, and rename.

Relational algebra is a procedural language: the user specifies both what is required and the sequence of operations to be performed on the existing relations to obtain it. The operations do not affect the original relations, which remain the same, and the resulting relation can act as input to another operation. Relational algebra operations can therefore be composed into a relational algebra expression, much as arithmetic operations (+, -, *) are composed into arithmetic expressions. For example, R1 ∪ R2 is a relational expression where R1 and R2 are relations.

Relational algebra is a formal language and is not user-friendly. It illustrates the basic operations required of any data manipulation language, but it is rarely used directly in commercial languages because it lacks syntactic details. It nevertheless serves as a fundamental technique for extracting data from the database.
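To make the idea of composing operations concrete, here is a small Python sketch, assuming a relation is represented as a list of dictionaries (one dictionary per row). The function names and the `employees` data are illustrative assumptions and do not come from any particular DBMS.

```python
def select(relation, predicate):
    """Selection: keep only the rows for which the predicate holds."""
    return [row for row in relation if predicate(row)]


def project(relation, attributes):
    """Projection: keep only the named attributes and drop duplicate rows."""
    seen, result = set(), []
    for row in relation:
        key = tuple(row[a] for a in attributes)
        if key not in seen:
            seen.add(key)
            result.append(dict(zip(attributes, key)))
    return result


def union(r1, r2):
    """Union: rows that appear in either relation, without duplicates."""
    result = []
    for row in r1 + r2:
        if row not in result:
            result.append(row)
    return result


def difference(r1, r2):
    """Set difference: rows of r1 that do not appear in r2."""
    return [row for row in r1 if row not in r2]


def product(r1, r2):
    """Cartesian product; assumes the two relations share no attribute names."""
    return [{**a, **b} for a in r1 for b in r2]


# Hypothetical sample relation.
employees = [
    {"name": "Asha", "dept": "IT"},
    {"name": "Ravi", "dept": "IT"},
    {"name": "Meena", "dept": "HR"},
]

# Composition: the output of select is the input of project.
it_names = project(select(employees, lambda r: r["dept"] == "IT"), ["name"])
```

Each function returns a new list and leaves its inputs untouched, which mirrors the point that the original relations are not affected by an operation.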

(ii) Write short notes on the client-server approach.

Answer:

Client-server is just one approach to managing network applications. The primary alternative, peer-to-peer networking, models all devices as having equivalent capability rather than specialized client or server roles. Compared to client-server, peer-to-peer networks offer some advantages, such as more flexibility in growing the system to handle a large number of clients. Client-server networks generally offer advantages in keeping data secure.

The client-server model was originally developed to allow more users to share access to database applications. Compared to the mainframe approach, client-server offers improved scalability because connections can be made as needed rather than being fixed. The client-server model also supports modular applications, which can make the job of creating software easier. In so-called "two-tier" and "three-tier" client-server systems, software applications are separated into modular pieces, and each piece is installed on clients or servers specialized for that subsystem.

Part B

Answer all the questions:

1. What is normalization? Explain the normalized relations.

Answer:

Normalization is the process of organizing the fields and tables of a relational database to minimize redundancy and dependency. Normalization usually involves dividing large tables into smaller (and less redundant) tables and defining relationships between them. The objective is to isolate data so that additions, deletions, and modifications of a field can be made in just one table and then propagated through the rest of the database via the defined relationships.

Normalization of data is a process of analyzing the given relation schemas based on their functional dependencies (FDs) and primary keys to achieve the desirable properties of

- Minimizing redundancy

- Minimizing the insertion, deletion and update anomalies.

First Normal Form:

First normal form imposes a very basic requirement on relations; unlike the other normal forms, it does not require additional information such as functional dependencies.

A domain is atomic if elements of the domain are considered to be indivisible units. We say that a relation schema R is in first normal form (1NF) if the domains of all attributes of R are atomic.

A set of names is an example of a nonatomic value. For example, if the schema of a relation employee included an attribute children whose domain elements are sets of names, the schema would not be in first normal form.

Composite attributes, such as an attribute address with component attributes street and city, also have nonatomic domains.

Second Normal Form:

2NF is based on the concept of full functional dependency.

A functional dependency X -> Y is a full functional dependency if removal of any attribute A from X means that the dependency no longer holds.

A relation schema R is in 2NF if every non-prime attribute A in R is fully functionally dependent on the primary key (PK) of R.

A relation schema that is not in 2NF can be decomposed into a number of 2NF relations in which non-prime attributes are associated only with the part of the PK on which they are fully functionally dependent.

Third Normal Form:

Boyce-Codd normal form (BCNF) requires that all nontrivial dependencies be of the form α -> β, where α is a superkey. 3NF relaxes this constraint slightly by allowing certain nontrivial functional dependencies whose left side is not a superkey.

A relation schema R is in third normal form (3NF) with respect to a set F of functional dependencies if, for all functional dependencies in F+ of the form α -> β, where α ⊆ R and β ⊆ R, at least one of the following holds:

- α -> β is a trivial functional dependency.

- α is a superkey of R.

- Each attribute A in β – α is contained in a candidate key for R.
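The superkey test used in the BCNF and 3NF conditions can be checked mechanically by computing the closure of an attribute set under the given functional dependencies. The sketch below is one straightforward way to do that in Python; the schema `works_on` and its two dependencies are invented purely for illustration.

```python
def closure(attributes, fds):
    """Return every attribute functionally determined by `attributes`.

    `fds` is a list of (lhs, rhs) pairs, each side being a set of attribute names.
    """
    result = set(attributes)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            # If the left side is already covered, the right side follows.
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return result


def is_superkey(attributes, schema, fds):
    """A set of attributes is a superkey if its closure covers the whole schema."""
    return closure(attributes, fds) >= set(schema)


# Hypothetical schema: works_on(emp_id, project_id, emp_name, hours)
works_on = {"emp_id", "project_id", "emp_name", "hours"}
works_on_fds = [
    ({"emp_id"}, {"emp_name"}),
    ({"emp_id", "project_id"}, {"hours"}),
]

emp_closure = closure({"emp_id"}, works_on_fds)
key_ok = is_superkey({"emp_id", "project_id"}, works_on, works_on_fds)
```

In this made-up schema, `emp_name` is determined by `emp_id` alone, which is only part of the key (emp_id, project_id). That partial dependency is exactly what the 2NF definition above rules out.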

BCA 05 (Elements of System Analysis and Design) TNOU BCA Assignment 2012

PART A

Answer all questions

- What is the basic difference between the system approach and system analysis?

Answer:

System Approach:

In the system approach, the same concept used to structure an organization is used to break down and design software systems. You first identify the granular, leaf-level functions of the system (the tasks of the system) and group them into classes, where each class is responsible for similar types of operations. These classes are much like the workers of an organization. As the number of workers in an organization increases, managers are added to manage the worker group, and the same is done in the software system. As the number of managers grows, that group is pushed down and a few senior managers are introduced at the top to control them. During this process, you also need to carefully group the classes of the system into modules. The number of managers and the depth of the manager pool inside a module are decided by the complexity of the module (or the number of worker classes grouped into the module). These modules are treated as separate divisions of an organization, where each module is controlled by one or more managers, positioned according to the complexity of the system. These module-controlling managers help the modules interact with each other.

System Analysis:

- Signals that are continuous in time and continuous in value are known as analog signals.

- Signals that are discrete in time and discrete in value are known as digital signals.

- Signals that are discrete in time and continuous in value are called discrete-time signals. While important mathematically, systems that process discrete time signals are difficult to physically realize. The methods developed for analyzing discrete time signals and systems are usually applied to digital and analog signals and systems.

- Signals that are continuous in time and discrete in value are sometimes seen in the timing analysis of logic circuits, but have little to no use in system analysis.

- How is cost-benefit analysis defined?

Answer:

Cost-benefit analysis is used to determine the economic feasibility of a project. The total expected costs are weighed against the total expected benefits. If the benefits outweigh the costs over a given period of time, the project may be considered financially viable. The costs involved in a software development project consist of the initial development cost (the costs incurred up to the point where the new system becomes operational) and the operating costs of the system throughout its expected useful lifetime (usually a period of five years). The expectation is that at some point in the system's lifetime, the accumulated financial benefits of the system will exceed the cost of development and the ongoing operating costs. This point in time is usually referred to as the break-even point.

The benefits of the new system are usually considered to be the tangible financial benefits engendered by the system. These could be manifested as reduced operating costs, increased revenue, or a combination of the two. In some cases there may be one or more less tangible benefits (i.e., benefits that cannot be measured in financial terms), but such benefits are difficult to assess. Indeed, the accuracy of a cost-benefit analysis depends on the accuracy with which the development costs, operational costs, and future benefits of the system can be estimated, and its outcome should always be treated with caution.
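The break-even point described above can be found with simple cumulative arithmetic. The following Python sketch assumes, for simplicity, that operating costs and benefits are the same every year; the function name and the example figures are illustrative only.

```python
def break_even_year(development_cost, annual_operating_cost, annual_benefit,
                    lifetime_years=5):
    """Return the first year in which cumulative benefits meet or exceed
    cumulative costs, or None if that never happens within the lifetime."""
    cumulative_cost = development_cost
    cumulative_benefit = 0
    for year in range(1, lifetime_years + 1):
        cumulative_cost += annual_operating_cost
        cumulative_benefit += annual_benefit
        if cumulative_benefit >= cumulative_cost:
            return year
    return None


# Hypothetical figures: 100,000 to develop, 10,000 a year to run.
viable = break_even_year(100_000, 10_000, 50_000)
not_viable = break_even_year(100_000, 10_000, 20_000)
```

With a benefit of 50,000 a year the project breaks even in year 3 (150,000 in benefits against 130,000 in costs). With only 20,000 a year it never breaks even within the five-year lifetime, so the function returns `None`.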

- What are the basic rules for CRT input display screens?

Answer:

The cathode ray tube (CRT) is a vacuum tube containing an electron gun (a source of electrons, or electron emitter) and a fluorescent screen used to view images. It has a means to accelerate and deflect the electron beam onto the fluorescent screen to create the images. The image may represent electrical waveforms (oscilloscope), pictures (television, computer monitor), radar targets, and others.

Many online data entry devices are CRT screens that provide instant visual verification of input data and a means of prompting the operator. The operator can make any changes desired before the data go to the system for processing. A CRT screen is actually a display station that has a buffer (memory) for storing data. A common display size is 24 rows of 80 characters each, or 1,920 characters.

There are two approaches to designing data on CRT screens: manual and software utility methods. The manual method uses a work sheet much like a print layout chart. The menu or data to be displayed are blocked out in the areas reserved on the chart and then incorporated into the system to formalize data entry. For example, the first command in the partial program is interpreted by the system as follows: "Go to row 10 and column 10 on the screen and display (SAY) the statement typed between quotes." The same applies to the next three commands. The command "WAIT TO A" tells the system to keep the menu on the screen until the operator types the option next to the word "WAITING."

The main objective of screen display design is simplicity for accurate and quick data capture or entry. Other guidelines are:

1. Use the same format throughout the project.

2. Allow ample space for the data. Overcrowding causes eye strain and may tax the interest of the user.

3. Use easy-to-learn and consistent terms, such as "add," "delete," and "create."

4. Provide help or tutorial for technical terms or procedures.
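The row-and-column placement described in the answer can be imitated with a small character buffer. The sketch below is a Python stand-in for a 24 x 80 display buffer, not the original program the answer refers to; the function names and the menu text are hypothetical.

```python
ROWS, COLS = 24, 80  # common CRT display size: 24 x 80 = 1,920 characters


def blank_screen():
    """Create an empty display buffer filled with spaces."""
    return [[" "] * COLS for _ in range(ROWS)]


def say(screen, row, col, text):
    """Write text starting at a 1-based (row, col); clip at the right edge."""
    if not (1 <= row <= ROWS and 1 <= col <= COLS):
        raise ValueError("position is off the screen")
    for offset, ch in enumerate(text[: COLS - col + 1]):
        screen[row - 1][col - 1 + offset] = ch


def render(screen):
    """Turn the buffer into printable lines of text."""
    return "\n".join("".join(line) for line in screen)


# Hypothetical menu laid out on consecutive rows at the same column,
# following the "same format" and "consistent terms" guidelines.
screen = blank_screen()
say(screen, 10, 10, "1. ADD A RECORD")
say(screen, 11, 10, "2. DELETE A RECORD")
say(screen, 12, 10, "3. CREATE A FILE")
lines = render(screen).split("\n")
```

Planning the layout on a grid like this is the software equivalent of blocking out areas on the paper layout chart used by the manual method.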

PART B

ANSWER THE FOLLOWING:

- What are the attributes of a good analyst?

Answer:

A system analyst has a range of functions, including research of problems, planning of solutions, recommendation of systems and software, and coordination of the developments necessary to meet business and other requirements.

In addition, analysts act as a liaison between vendors, IT professionals, and end users, and are often responsible for design considerations, translation of user-specific requests into technical specifications, cost analysis and development, and implementation timelines.

Typical problems solved by system analysts include the cost-efficiency of inventory holding, evaluation of customer needs such as ordering times, and efficient analysis of financial information.

- Necessary Skills

As well as technical skills and knowledge, including knowledge of hardware, software, and the whole range of techniques involved in system design, planning, programming, development, testing, and application, a system analyst also needs business skills. These include knowledge of organizational structures and management techniques; the traditions, plans, strategies, and values of an organization; and functional work processes.

A system analyst also needs people skills, including an understanding of how people learn, think, work, respond to change, and communicate. Communication and interpersonal skills are crucial for obtaining information, motivating other individuals, and achieving cooperation, as well as for understanding the complex processes within an organization if the necessary support is to be provided.

- Qualities and Characteristics

A good system analyst should consequently have excellent knowledge of, and skill with, the techniques and tools used in technology, business, and working with people, as well as an ability to adapt to and comprehend constantly advancing technology and business methods.

A good system analyst should be a logical and critical thinker who is inquisitive, patient, persevering, methodical, and broad-minded. In addition, the analyst must be able to communicate with individuals from varying backgrounds in order to motivate them and to achieve and maintain cooperation between all parties involved.

Presence

First and foremost, a good analyst must be able to put a case across and get action as a result. They need to be taken seriously by the rest of the organization. This requires good communications skills and the ability to influence discussions and outcomes. Someone may be technically very good, but that's wasted if they can't get a message across.

When recruiting analysts in the past, a major part of the selection process was getting the candidate to deliver a data based presentation to a group of prospective colleagues and internal customers. If they couldn't get their point across clearly and concisely, they usually didn't make it through to the next stage.

Commercial Awareness

In my mind, a good analyst must be as commercially aware as the people they deal with in business. He or she must understand the business implications of their analysis and recommendations. They have to be close to the business and also have a firm grasp of the context in which the business operates. There's a difference between "insight" and "actionable insight."

Data Dexterity

Obviously, an analyst must be numerate and feel comfortable with numbers. I also think a good analyst needs to have "data dexterity." By this I mean the analyst has to feel comfortable working with large volumes of data from disparate data sources, and be able to spot relevant patterns and trends. This is different to being numerate. Producing an analysis is also different from producing a report.

Another key skill is the ability to spot when data are blatantly wrong and "just don't look right." Too often, I've seen analysts come a cropper when they make recommendations based on what was obviously dirty data.

Attention to Detail

A good analyst must be able to think both strategically and at low levels of detail. The devil is in the details, and often there are problems or issues to be addressed that require close attention to the detail. Above all, the analyst must be suspicious and question things that don't look right. A simple mistake can prove very costly down the line.

Having said all this, a good analyst should be technically competent, but that, in a way, is a hygiene factor. The qualities listed above are what enable you to separate the winners from the also-rans. It's an optimistic set of requirements, but someone with the right temperament and attitude can be trained in some of these areas. Look for the fertile ground.

The next generation of Web analysts may not necessarily be where you expect them to be. They may be in related or even quite separate functions or industries. But if they have the right qualities and attributes, then they're worth tracking down.

Sunday, December 9, 2012

BCA 01 (Computer Fundamentals and Pc Software) TNOU first year Assignment 2012 batch

Answer all the questions (3 x 5 = 15) (each answer will be 150 words)

1. Explain the features of the multiprogramming operating system.

Answer:

The multiprogramming operating system

A multiprogramming operating system can execute several jobs concurrently by switching the attention of the CPU back and forth among them. This switching is usually prompted by a relatively slow input, output, or storage request that can be handled by a buffer, spooler, or channel, freeing the CPU to continue processing.

Multiprogramming makes efficient use of the CPU by overlapping the demands for the CPU and its I/O devices from various users. It attempts to increase CPU utilization by always having something for the CPU to execute.
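A common textbook approximation, which is not part of the answer itself, puts a number on this effect. If each job spends a fraction p of its time waiting for I/O and the jobs wait independently of one another, the CPU is idle only when all n jobs in memory are waiting at once, so utilization is roughly 1 - p^n. The Python sketch below simply evaluates that formula; the function name is an assumption made for illustration.

```python
def cpu_utilization(io_wait_fraction, jobs_in_memory):
    """Approximate CPU utilization as 1 - p**n.

    Assumes every job waits for I/O the same fraction of the time and that
    the waits are independent, which real workloads only roughly satisfy.
    """
    if not 0.0 <= io_wait_fraction <= 1.0:
        raise ValueError("io_wait_fraction must be between 0 and 1")
    if jobs_in_memory < 0:
        raise ValueError("jobs_in_memory must not be negative")
    return 1.0 - io_wait_fraction ** jobs_in_memory


single_job = cpu_utilization(0.8, 1)   # one job that waits 80% of the time
four_jobs = cpu_utilization(0.8, 4)    # four such jobs sharing the CPU
```

Under these assumptions a single I/O-bound job keeps the CPU only about 20% busy, while four such jobs raise utilization to about 59%.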

The primary reason multiprogramming operating systems were developed, and the reason they are popular, is that they enable the CPU to be utilized more efficiently. If the operating system can quickly switch the CPU to another task whenever the one being worked on requires relatively slow input, output, or storage operations, then the CPU is not allowed to stand idle.

Features of the multiprogramming operating system:

i) It increases CPU utilization.

ii) It decreases total read time needed to execute a job.

iii) It maximizes the total job throughput of a computer.

Example: three jobs are submitted

Almost no contention for resources

All 3 can run in minimum time in a multitasking environment (assuming JOB2/3 have enough CPU time to keep their I/O operations active)

____________________________________________________________________________________

2. (i) What is a GUI?

A graphical user interface (GUI) is a human-computer interface (i.e., a way for humans to interact with computers) that uses windows, icons, and menus and can be manipulated by a mouse (and often, to a limited extent, by a keyboard as well). GUIs stand in sharp contrast to command line interfaces (CLIs), which use only text and are accessed solely by a keyboard. The most familiar example of a CLI to many people is MS-DOS. Another example is Linux when it is used in console mode (i.e., the entire screen shows text only).

A window is a (usually) rectangular portion of the monitor screen that can display its contents (EXAMPLE: a program, icons, a text file or an image) seemingly independently of the rest of the display screen. A major feature is the ability for multiple windows to be open simultaneously. Each window can display a different application, or each can display different files (e.g., text, image or spreadsheet files) that have been opened or created with a single application.

An icon is a small picture or symbol in a GUI that represents a program (or command), a file, a directory or a device (such as a hard disk or floppy). Icons are used both on the desktop and within application programs. Examples include small rectangles (to represent files), file folders (to represent directories), a trash can (to indicate a place to dispose of unwanted files and directories) and buttons on web browsers (for navigating to previous pages, for reloading the current page, etc.).

Commands are issued in the GUI by using a mouse, trackball, or touchpad to first move a pointer on the screen to, or on top of, the icon, menu item, or window of interest in order to select that object. Then, for example, icons and windows can be moved by dragging (moving the mouse with the button held down), and objects or programs can be opened by clicking on their icons.

2. (ii) Explain the classification of viruses.

Answer:

Computer viruses are classified according to their nature of infection and behavior. The different classes of computer virus are given below.

• Boot Sector Virus: A boot sector virus infects the first sector of the hard drive, where the Master Boot Record (MBR) is stored. The MBR stores the disk's primary partition table and the bootstrapping instructions that are executed after the computer's BIOS passes execution to machine code. If a computer is infected with a boot sector virus, the virus launches immediately when the computer is turned on and is loaded into memory, enabling it to control the computer.

• File Deleting Viruses: A file deleting virus is designed to delete critical files that are part of the operating system, or data files.

• Mass Mailer Viruses: Mass mailer viruses search e-mail programs such as MS Outlook for the e-mail addresses stored in the address book, and replicate by e-mailing themselves to those addresses.

• Macro Viruses: Macro viruses are written in macro programming languages such as VBA, which is a feature of the MS Office package. A macro is a way to automate and simplify a task that you perform repeatedly in the MS Office suite (MS Excel, MS Word, etc.). These macros are usually stored as part of the document or spreadsheet and can travel to other systems when the files are transferred to other computers.

• Polymorphic Viruses: Polymorphic viruses change their appearance and their code every time they infect a different system, in order to avoid detection and disinfection by anti-virus applications. After doing their work, these viruses try to hide from the anti-virus application by encrypting parts of themselves. This is known as mutation.

• Armored Viruses: Armored viruses are designed and written to make themselves difficult to detect or analyze. An armored virus may also be able to protect itself from antivirus programs, making it more difficult to disinfect.

• Stealth Viruses: Stealth viruses can hide from the operating system or anti-virus software by making changes to file sizes or the directory structure. Stealth viruses are anti-heuristic in nature, which helps them hide from heuristic detection.

• Retrovirus: A retrovirus tries to attack and disable the anti-virus application running on the computer, and can be considered an anti-antivirus. Some retroviruses stop the anti-virus application from running, while others destroy the virus definition database.

• Multiple Characteristic Viruses: Multiple characteristic viruses combine the characteristics and capabilities of several of the virus types above.

3. (i) Write short notes on mail merge.

Answer:

A mail merge is a method of taking data from a database, spreadsheet, or other form of structured data and inserting it into documents such as letters, mailing labels, and name tags. It usually requires two files: one storing the variable data to be inserted, and the other containing both the instructions for formatting the variable data and the information that will be identical across each result of the mail merge. For example, in a form letter, you might include instructions to insert the name of each recipient in a certain place; the mail merge would combine this letter with a list of recipients to produce one letter for each person in the list.

You can also print a set of mailing labels or envelopes by doing a mail merge. For labels, for example, you would construct a source document containing the addresses of the people you wish to print labels for and a main document that controls where each person's name, address, city, state, and zip code will go on the label. The main document would also contain information about how many labels are on a page, the size of each label, the size of the sheet of paper the labels are attached to, and the type of printer you will use to print the labels. Running a mail merge with the two files results in a set of labels, one for each entry in the source document, with each label formatted according to the information in the main document.

Most major word processing packages (e.g., Microsoft Word) are capable of performing a mail merge.

Answer the Following: (1 X10)

With 300 Words

1. Explain the Network Architecture of the OSI Reference Model.

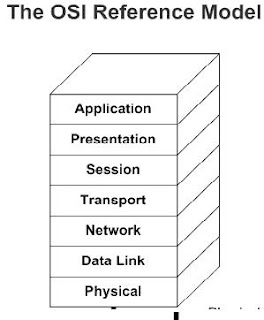

The Open Systems Interconnection (OSI) model is a product of the Open Systems Interconnection effort at the International Organization for Standardization (ISO).

OSI is an architectural model for open networking systems that was developed by the International Organization for Standardization (ISO), with work beginning in the late 1970s and the reference model published as a standard in 1984. The OSI reference model was intended as a basis for developing universally accepted networking protocols, but this initiative essentially failed for the following reasons:

- The standards process was relatively closed compared with the open standards process used by the Internet Engineering Task Force (IETF) to develop the TCP/IP protocol suite.

- The model was overly complex. Some functions (such as connectionless communication) were neglected, while others (such as error correction and flow control) were repeated at several layers.

- The growth of the Internet and TCP/IP—a simpler, real-world protocol model—pushed the OSI reference model out.

The OSI reference model is best seen as an idealized model of the logical connections that must occur in order for network communication to take place. Most protocol suites used in the real world, such as TCP/IP, DECnet, and Systems Network Architecture (SNA), map somewhat loosely to the OSI reference model. The OSI model is a good starting point for understanding how various protocols within a protocol suite function and interact.
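
As an illustration of this loose mapping, the sketch below groups the seven OSI layers (listed in the table later in this answer) under the four layers commonly used to describe TCP/IP. The grouping is the usual textbook one and the boundaries are approximate; the dictionary and function names are chosen only for this example.

```python
# The seven OSI layers, keyed by layer number.
OSI_LAYERS = {
    7: "Application",
    6: "Presentation",
    5: "Session",
    4: "Transport",
    3: "Network",
    2: "Data-link",
    1: "Physical",
}

# Commonly cited four-layer TCP/IP grouping (approximate, not an exact match).
TCPIP_LAYERS = {
    "Application": [7, 6, 5],
    "Transport": [4],
    "Internet": [3],
    "Link": [2, 1],
}


def tcpip_layer_for(osi_number):
    """Return the TCP/IP layer that roughly covers the given OSI layer number."""
    for name, numbers in TCPIP_LAYERS.items():
        if osi_number in numbers:
            return name
    raise ValueError(f"no such OSI layer: {osi_number}")


for number in sorted(OSI_LAYERS, reverse=True):
    print(number, OSI_LAYERS[number], "->", tcpip_layer_for(number))
```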

The OSI reference model characterizes and standardizes the functions of a communications system in terms of abstraction layers. The figure at the start of this answer shows its network architecture. The model has seven logical layers, as shown in the following table.

| Layer | Name | Description |
| --- | --- | --- |
| 7 | Application layer | Interfaces user applications with network functionality, controls how applications access the network, and generates error messages. Protocols at this level include HTTP, FTP, SMTP, and NFS. |
| 6 | Presentation layer | Translates data to be transmitted by applications into a format suitable for transport over the network. Redirector software, such as the Workstation service for Microsoft Windows NT, is located at this level. Network shells are also defined at this layer. |
| 5 | Session layer | Defines how connections can be established, maintained, and terminated. Also performs name resolution functions. |
| 4 | Transport layer | Sequences packets so that they can be reassembled at the destination in the proper order. Generates acknowledgments and retransmits packets. Assembles packets after they are received. |
| 3 | Network layer | Defines logical host addresses such as IP addresses, creates packet headers, and routes packets across an internetwork using routers and Layer 3 switches. Strips the headers from the packets at the receiving end. |
| 2 | Data-link layer | Specifies how data bits are grouped into frames, and specifies frame formats. Responsible for error correction, flow control, hardware addressing (such as MAC addresses), and how devices such as bridges and Layer 2 switches operate. It is divided into two sublayers, the logical link control (LLC) layer and the media access control (MAC) layer. |
| 1 | Physical layer | Defines network transmission media, signaling methods, bit synchronization, architecture (such as Ethernet or Token Ring), and cabling topologies. Defines how network interface cards (NICs) interact with the media (cabling). |
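
The way headers are added on the sending side and stripped on the receiving side can be sketched as a toy model. This is a simplification for illustration only: real headers are binary structures rather than bracketed names, and the three layers chosen here and the `send` and `receive` helpers are assumptions of the example.

```python
# Layers that add a header in this toy model, top of the stack first.
LAYERS = ["transport", "network", "data-link"]


def send(payload):
    """Wrap the payload in one header per layer, working down the stack."""
    frame = payload
    for layer in LAYERS:
        frame = f"[{layer}]{frame}"
    return frame


def receive(frame):
    """Strip the headers in the opposite order, working up the stack."""
    for layer in reversed(LAYERS):
        header = f"[{layer}]"
        if not frame.startswith(header):
            raise ValueError(f"missing {layer} header")
        frame = frame[len(header):]
    return frame


frame = send("hello")
print(frame)           # [data-link][network][transport]hello
print(receive(frame))  # hello
```

The lowest layer's header ends up outermost, so the receiver removes it first and hands the rest up to the next layer.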
